In the rapidly evolving landscape of generative AI, the ability to craft effective prompts has emerged as a crucial skill. Prompt engineering—the art and science of formulating instructions for AI models—can be the difference between receiving mediocre outputs and generating remarkably useful content. This blog explores the fundamentals of prompt engineering and provides practical strategies to enhance your interactions with AI models.

Understanding the Prompt-Response Relationship

At its core, prompt engineering is about clear communication. AI models interpret your instructions through the lens of their training data and respond based on patterns they’ve learned. Think of prompts as conversations with a highly knowledgeable but extremely literal colleague who needs precise instructions to deliver what you need.

Key Principles for Effective Prompts

Be Specific and Clear

Vague prompts yield vague responses. Instead of asking “Tell me about cloud computing,” try “Explain the key differences between IaaS, PaaS, and SaaS in cloud computing, with one example of each.” The specificity guides the model toward the exact information you seek.

Provide Context

AI models lack the situational awareness humans naturally possess. Providing relevant context helps the model generate more appropriate responses. For instance, specify whether you’re writing for technical experts or beginners, or include background information about a specific domain.

Structure Your Requests

Breaking complex requests into structured components helps models organize their responses. Consider using numbered points or clear sections in your prompt. For example: “Create a product description for a wireless headset. Include: 1) Key technical specifications, 2) Three main benefits, and 3) Target audience.”

Set the Tone and Format

Models can adapt to different communication styles, but you need to specify what you want. Explicitly request formal or conversational tone, technical or simplified language, or specific output formats like bullet points, paragraphs, or code snippets.

Advanced Techniques

Role Prompting

Assigning a role to the model can dramatically improve outputs for specialized tasks. For example: “As an experienced software architect, review this system design and identify potential scalability issues.” This technique leverages the model’s understanding of how different professionals approach problems.

Few-Shot Learning

Providing examples within your prompt demonstrates the pattern you want the model to follow. For instance, if you want a specific question-answer format, include 2-3 examples before asking for additional responses. This shows rather than tells the model what you expect.

Iterative Refinement

Prompt engineering is rarely perfect on the first try. Start with a basic prompt, evaluate the response, then refine your instructions based on what worked and what didn’t. This iterative approach leads to increasingly better results.

Common Pitfalls to Avoid

Overcomplicating Instructions

While details help, excessive complexity can confuse models. Balance specificity with clarity, focusing on essential requirements rather than overwhelming the model with constraints.

Assuming Technical Understanding

Even advanced models may struggle with highly specialized jargon without proper context. Define unusual terms or provide references when working in niche domains.

Neglecting Ethical Boundaries

Consider the ethical implications of your prompts. Responsible prompt engineering involves respecting privacy, avoiding harmful outputs, and ensuring factual accuracy.

Practical Applications

Effective prompt engineering enhances numerous workflows:

- Content creation becomes more efficient when you precisely specify tone, audience, and purpose

- Technical documentation can be drafted faster with well-structured prompts about functionality and use cases

- Problem-solving benefits from prompts that clearly define constraints and desired outcomes

- Learning new concepts improves when you structure prompts to build on your existing knowledge

Advanced Prompt Engineering Techniques

Building on our previous discussion about prompt engineering basics, let’s explore more sophisticated techniques that can significantly enhance your interactions with AI models.

Prompt Patterns and Templates

Beyond the basic principles we’ve already covered, several powerful prompt patterns can yield better results:

Chain-of-Thought Prompting

Guide the model through step-by-step reasoning by explicitly asking it to “think through this step by step” or “reason through this problem.” This technique dramatically improves performance on complex reasoning tasks by encouraging the model to break down problems into manageable components.

e.g.- “Analyze the impact of this company’s recent 15% revenue increase alongside a 20% increase in operating expenses. Think through each step to determine if this represents a positive or negative trend for overall profitability.”

Tree-of-Thought Prompting

Similar to chain-of-thought, but explores multiple reasoning paths simultaneously. Ask the model to consider different approaches to a problem and evaluate the merits of each before arriving at a conclusion.

e.g.- “We need to determine whether to launch our product in Market A or Market B. Consider the following decision paths: Path 1: Analyze based on potential market size and growth rate Path 2: Analyze based on existing competition and barriers to entry Path 3: Analyze based on our company’s current capabilities and resources For each path, identify the key considerations, evaluate the pros and cons, and then synthesize these analyses to recommend the optimal market entry strategy.”

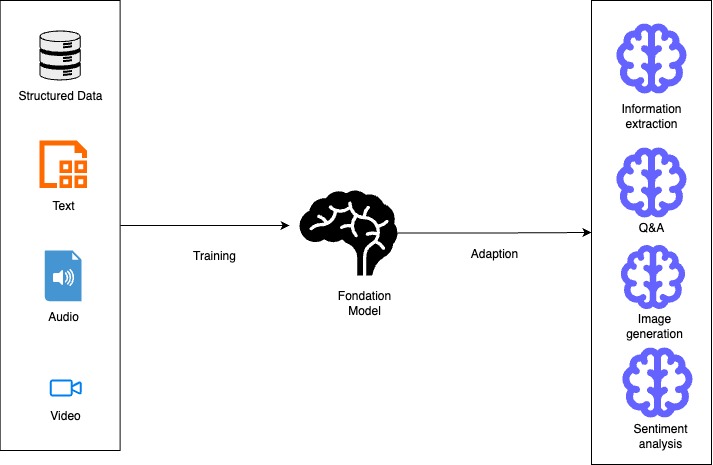

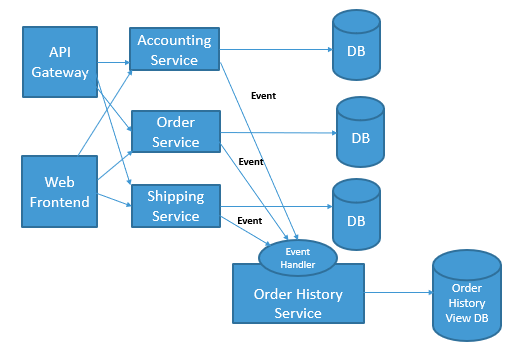

Retrieval Augmented Generation (RAG)

When working with domain-specific knowledge, provide relevant information within your prompt. This creates context that helps the model generate more accurate and informed responses.

How RAG Works

RAG combines two fundamental processes:

- Retrieval: A semantic search mechanism identifies relevant content from curated knowledge sources such as internal documents, product manuals, or case logs. This typically leverages vector embeddings to find contextually relevant information.

- Generation: The retrieved context is provided as part of the prompt to the LLM, allowing it to craft an answer grounded in that authoritative information.

This two-step process enables “closed-book” foundation models to act as if they had access to your live, curated enterprise data, without requiring model retraining.

Zero-shot, One-shot, and Few-shot Learning

These refer to providing different levels of examples:

- Zero-shot: No examples provided, just instructions

- One-shot: A single example before your request

- Few-shot: Multiple examples establishing a pattern

Advanced Control Techniques

Control Max Token Length

Set explicit limits on response length either in your configuration or directly in your prompt. For example: “Explain quantum computing in exactly three paragraphs” or “Provide a 50-word summary.”

Use Variables in Prompts

Create reusable prompt templates with placeholders that can be filled with different inputs. This is particularly valuable when building applications that interact with AI models repeatedly.

Request Structured Output

When you need data in a specific format, explicitly request it. For example:

“Provide your analysis in JSON format with the following fields: key_findings, recommendations, and risk_level.”

Optimization Strategies

Experiment and Iterate

The most successful prompt engineers continuously refine their approaches. Track what works, what doesn’t, and systematically improve your prompts through deliberate experimentation.

Adapt to Model Updates

As AI models evolve, prompting strategies should too. Techniques that work well on one version may need adjustment for newer versions.

Document Your Experiments

Maintain records of your prompt attempts, configurations, outputs, and observations. This documentation helps identify patterns and refine strategies over time.

Collaborative Prompting

Exchange ideas with other prompt engineers. Different perspectives often lead to innovative approaches that may not have occurred to you individually.

Advanced Applications

Meta-Prompting

Ask the model to help improve your prompts. For example: “How would you modify this prompt to get better results for [specific task]?”

System and User Role Definition

Clearly define the role of both the model and yourself in the interaction. This establishes expectations and guides the model’s response style.

Temperature and Sampling Controls

Adjust these parameters to balance creativity versus determinism in responses. Lower temperature settings (closer to 0) produce more consistent and predictable outputs. Higher values (closer to 1) increase randomness and creativity.

Incorporate these advanced techniques into your prompt engineering toolkit. You’ll manage to achieve more precise results from AI models. The outcomes will be useful and reliable across a wide range of applications.

Temperature Controls

Temperature is a scaling factor applied within the final softmax layer of an AI model that directly affects the randomness of outputs. It works by influencing the shape of the probability distribution that the model calculates:

Temperature typically ranges from 0 to 1, with each setting producing dramatically different results:

Lower Temperature (0 ~ 0.5) :

- Creates a more peaked probability distribution

- Concentrates probability in fewer words

- Produces more predictable, deterministic outputs

- Ideal for factual, precise responses where consistency matters

Higher Temperature (~ 1):

- Softens the probability distribution, making it more uniform

- Gives lower probability tokens a higher chance of being selected

- Generates more diverse, creative, and sometimes unexpected responses

- Better for creative writing, brainstorming, or generating varied options

For example, at a very low temperature, if the model predicts the next word in “A golden ale on a summer ___” with probabilities for “day” (30%), “night” (10%), and “moon” (5%), it would almost always choose “day.” At higher temperatures, “night” and “moon” become increasingly likely to be selected.

Sampling Methods

While temperature controls the probability distribution, sampling methods determine how tokens are actually selected from that distribution:

- Greedy Sampling: Always selects the highest probability token (effectively what happens at very low temperatures)

- Random Sampling: Selects tokens based on their probability weightings, introducing variability

- Top-p (Nucleus) Sampling: Only considers the smallest set of tokens whose cumulative probability exceeds a threshold p

- Top-k Sampling: Only considers the k most likely next tokens

These controls are typically exposed through API parameters when working with generative AI models. For network engineers and other technical professionals using these models, understanding these parameters helps optimize results for specific use cases.

The default temperature value is typically 1.0, representing a balance between deterministic and creative outputs.

Conclusion

Prompt engineering is rapidly becoming an essential skill in our AI-augmented world. By applying these fundamental principles, you can significantly improve your interactions with AI models, transforming them from interesting novelties into powerful productivity tools that enhance your work and creative endeavors.

As you continue experimenting with different prompting techniques, you’ll develop an intuitive sense for what works best for various tasks and models. This skill will only grow more valuable as AI capabilities continue to advance.

Issues and Considerations

Issues and Considerations